User Research for a Medical Association

We conducted stakeholder interviews and a thematic analysis.

PROJECT OVERVIEW

Client: An international association representing a large medical specialty (name omitted intentionally).

Task: To conduct research to better understand issues with the association’s current website and how staff interacts with it. Our findings would inform our decisions for the upcoming website redesign.

My role: User Researcher

Challenge: We had very little understanding of what pain points users were experiencing on the website (and why) and how staff updates the website on a regular basis.

Team: Robert Rust (project manager); Orion Kinkaid (UX, remote); Casey DenBleyker (designer, remote); Paige Howarth (account manager)

Duration: 6 weeks

THE MAIN GIST

With the help of my team, I interviewed 46 stakeholders and conducted a thematic analysis of what they told us. We learned that the site navigation was overwhelming and that site search didn’t work well. Based on this research, we put together recommendations to inform the association’s upcoming website redesign.

MY PROCESS

Phase One:

Research Method Selection

We chose to conduct stakeholder interviews because we didn’t know why users were frustrated with the website or how staff interacted with it.

A medical association hired us to conduct research to inform their upcoming website redesign. While we had analytics on how people used the association’s existing website, we didn’t quite know what frustrations they were experiencing and why.

For example, we knew that the bounce rate on the homepage for the past 12 months was 66%. But was that because people found what they were looking for without interacting with the page and left (a success)? Or was it because they didn’t see the information they wanted and left the page to search for it elsewhere (a failure)?

The other challenge was that we didn’t know how staff was using the website. The association is made up of 8 departments that all had unique ways of adding, deleting, and updating content.

To that end, we decided to start off our research by conducting stakeholder interviews with each of the association’s 8 internal departments. We wanted to see how each department used the website and what complaints they had heard about it from their users.

Phase Two:

Stakeholder Interview Preparation

We prepared for the group interviews by writing a script and drafting customized questions for each department. We also formed a hypothesis about what we’d find.

Leading up to the interviews, I created a plan for how we’d conduct each interview and what questions we’d ask. We decided to do group interviews with each department because we wouldn’t have time to interview each staff member individually.

I drafted between 10 and 20 questions for each department, making sure to customize them based on who we were talking to. Some of the questions we asked were:

What do you think are users’ main pain points on the website?

How do you interact with this website as part of your role?

How would you describe the association’s visual style or brand personality?

Who are the users you’re trying to attract to the website?

How often do you add, update, and delete content on the site?

We made sure to get the perspectives of senior-level staff, who had been at the organization for a long time and set the strategic vision, as well as junior staff, who were updating the website every day.

On our team, we divided up the roles as follows:

UX (me) - led the interviews and asked questions

Project Manager (Robert) - took notes, set up technology

Account Manager (Paige) - kicked off the session and introduced the team

Before the interviews, I also formed a hypothesis about what we’d learn from the stakeholders. I based it on my personal opinions about the website, what the client team had told us in previous conversations, and Google Analytics data.

“The main pain points are going to be that the site is hard to navigate, visually overwhelming, and the content is disorganized and outdated. Most users will come to the website for events and resources. Staff does not have the proper processes in place to consistently update the website.”

Phase Three:

Conducting the Interviews

We conducted a 1-hour group interview with each department over the course of 3 days, adjusting our script and interview strategy after each session.

We conducted the interviews in person over the course of 3 days, meeting with each of the 8 departments for 1 hour. Being in the same room as the stakeholders let us read nonverbal cues, such as facial expressions and body language.

I led each interview with the help of my team. To kick off each session, I explained why we were there (“we’re redesigning the website and we want your input”) and how the interview would work, and then read a disclaimer:

“The format will be informal, interview-style questions, so please be as honest as possible. I’ll be taking notes, but your answers will remain anonymous. The interview will last about 60 minutes and will not be used to assess you or your job performance. There are no right or wrong answers.”

I used this disclaimer for two reasons. Because we were in a group setting, I was concerned that:

Some of the junior staff might not be fully honest about their impressions of the website, especially if their superior was also in the room. For that reason, I included the clause about the interview “not being used to assess you or your job performance.”

Groupthink might come into play, with some people hesitant to express opinions that went against the group consensus. For that reason, I included the clause “there are no right or wrong answers.”

Each interview included 4 to 8 people. I had several team members taking notes so that I could focus on people’s responses and ask follow-up questions. After our first interview, I made a few adjustments based on what I had learned:

It’s okay to go off script. I had written a preset list of questions and initially stuck very closely to it. But as I got more comfortable, I went “off script” and asked follow-up questions based on people’s responses, which proved to be very valuable.

Be okay with silence. There were a few times when I’d ask a question and no one would respond immediately. Instinctively, I rushed to clarify the question, but I later learned to pause and let people think about their answers in silence.

Make an active effort to get quieter individuals involved. In some interviews, certain people naturally talked more than others. To engage all staff members, we decided to start each interview by asking participants to “state your name, your role, and one way that you think this project will be successful.” This was an easy way to break the ice and encourage contributions from quieter individuals.

I also learned a few things I would have done differently:

Set clearer expectations up front. There were a few instances where we asked staff how they interact with the website and they said, “I don’t use it at all.” In that circumstance, there wasn’t much information that we could get from those individuals (although we did follow up by asking why).

Record the sessions. We decided not to record the interviews because we had several team members taking notes and were wary of making the interviewees nervous. But during the analysis, I found that a recording would have been helpful to refer back to.

Phase Four:

Thematic Analysis (AKA Affinity Diagramming or Mapping)

I conducted a thematic analysis to make sense of all of this qualitative data and uncover common themes across departments.

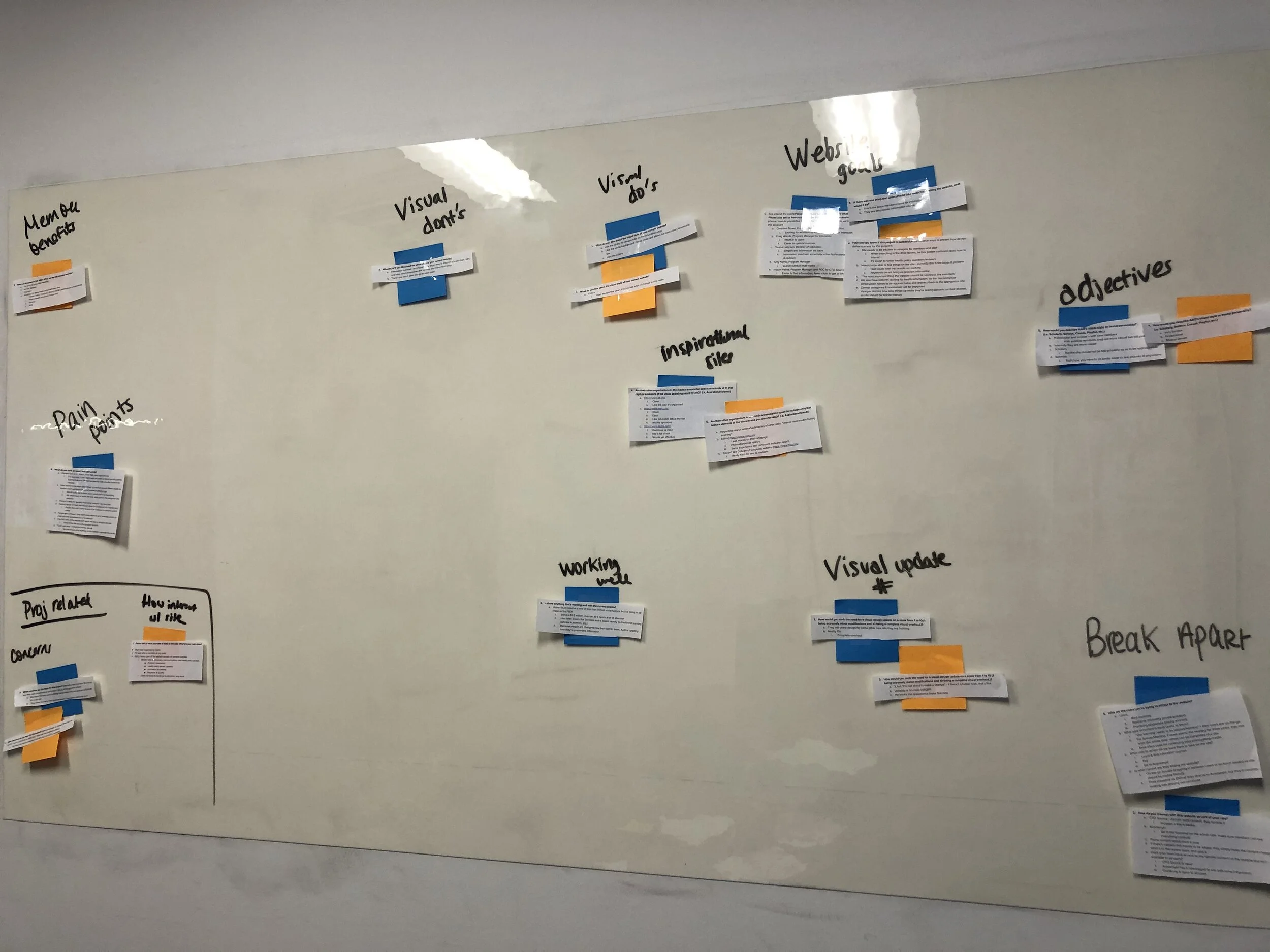

After we conducted the interviews, I had 50+ pages of notes to sort through! To make sense of all of this data, I decided to conduct a thematic analysis.

The thematic analysis allowed me to break down and organize the insights from the interviews and uncover common themes. I could see the similarities (and differences) between departments and visually map important relationships between different chunks of data.

To conduct the analysis, I followed these steps:

I started out by reading through all of the notes we had from each interview.

I cleaned up the notes by removing irrelevant answers and consolidating repeated information.

Next, I printed out all of our notes and cut them into individual sections so that each section contained one insight.

I affixed each section onto a color-coded Post-it so that I knew which department had made that comment.

I put all of these Post-its onto a whiteboard.

Finally, I grouped the responses by topic (e.g., website likes, website dislikes, website pain points, user groups). Laid out visually this way, the Post-its showed how each department responded to each question, which made it easier to identify common themes and inconsistencies.

Some of our key findings included:

The main pain points of the website were an overwhelming and confusing site navigation; the fact that the site didn’t speak to the association as a global, cutting-edge organization; and that site search didn’t work at all.

The main goals of the new website were to increase website traffic, increase membership, and increase attendance at events.

There was a bit of internal debate around how much content should be gated versus open to the public. Some departments wanted content to be free and public, while others argued that gated content was a unique benefit that only paying members should receive.

“We want people to be proud to be a member. We want them to know that we are the most trusted organization in this space.”

Rather than sending the client a long document, we decided to create a short presentation summarizing our key findings that could be disseminated throughout the organization. You can see some slides from the report below.

Phase Five:

Confirmation of Findings

Data from a user survey conducted the previous year revealed that user pain points aligned with what we learned in our stakeholder interviews.

After the stakeholder interviews, I wanted to conduct user interviews and a user survey to see whether the insights we obtained truly aligned with users’ pain points. However, due to conflicting priorities, we were unable to get access to the association’s users. Luckily, the association had conducted a survey the year before, and its data helped us confirm what we learned in the interviews.

18% of users rated the ease of finding what they were looking for on the website as “disappointing.”

53% of users come to the website for continuing education credits.

11% of users rated the organization of the website as “disappointing.”

This closely aligned with what we learned in the stakeholder interviews. It also turned out that our initial hypothesis was mostly correct! We were right about users’ pain points, but wrong about why most users come to the website.

RESULTS

TOP TAKEAWAYS

Based on the insights we gathered, we made the following recommendations:

Reorganize the main navigation so that users can find what they are looking for

Fix search so that it pulls relevant results

Use content and design to position the association as a global organization

I loved working on this project because it was one of the first times I had a budget dedicated solely to user research. Since we weren’t on a strict timeline, I was able to ask a lot of questions…and then ask more follow-up questions.

The main things I learned about conducting stakeholder interviews are described in Phase Three above.
